Overview

Recently, I was working with one of our customers and ran into a rather unique requirement and an uncommon constraint. The customer - Storm Petrel - has designed a grant management system called Tempest. This system is designed to aid local municipalities when applying for FEMA grants after a natural disaster occurs. As one can imagine, there is a lot of old fashioned paperwork when it comes to managing such a thing.

Thus, the requirement called for the ability to upload and store scanned documents. No OCR or anything like that, but rather invoices and receipts so that a paper trail of the work done and associated billing activity can be preserved. For APEX, this can be achieved without breaking a sweat, as the declarative BLOB feature can easily upload a file and store it in a BLOB column of a table, complete with filename and MIME type.

However, the tablespace storage costs from the hosting company for the anticipated volume of documents was considerable. So much so that the cost would have to be factored into the price of the solution for each customer, making it more expensive and obviously less attractive.

My initial thought was to use Amazon’s S3 storage solution, since the costs of storing 1GB of data for a month is literally 3 cents. Data transfer prices are also ridiculously inexpensive, and from what I have seen via marketing e-mails, the price of this and many of Amazon’s other AWS services have been on a downward trend for some time.

The next challenge was to figure out how to get APEX integrated with S3. I have seen some of the AWS API documentation, and while there are ample examples for Java, .NET and PHP, there is nothing at all for PL/SQL. Fortunately, someone else has already done the heavy lifting here: Morten Braten & Jeffrey Kemp.

Morten’s Alexandria PL/SQL Library is an amazing open-source suite of PL/SQL utilities which provide a number of different services, such as document generation, data integration and security. Jeff Kemp has a presentation on SlideShare that best covers the breadth of this utility. You can also read about the latest release - 1.7 - on Morton’s blog here. You owe it to yourself to check out this library whether or not you have any interest in AWS S3!

In this latest release of the library, Jeff Kemp has added a number of enhancements to the S3 integration piece of the framework, making it quite capable of managing files on S3 via a set of easy to use PL/SQL APIs. And these APIs can be easily & securely integrated into APEX and called from there. He even created a brief presentation that describes the S3 APIs.

Configuring AWS Users and Groups

So let’s get down to it. How does all of this work with APEX? First of all, you will need to create an AWS account. You can do this by navigating to http://aws.amazon.com/ and clicking on Sign Up. The wizard will guide you through the account creation process and collect any relevant information that it needs. Please note that you will need to provide a valid credit card in order to create an AWS account, as they are not free, depending on which services you choose to use.

Once the AWS account is created, the first thing that you should consider doing is creating a new user that will be used to manage the S3 service. The credentials that you use when logging into AWS are similar to root, as you will be able to access and manage and of the many AWS services. When deploying only S3, it’s best to create a user that can only do just that.

To create a new user:

1) Click on the Users tab

2) Click Create New User

3) Enter the User Name(s) and click Create. Be sure that Generate an access key for each User is checked.

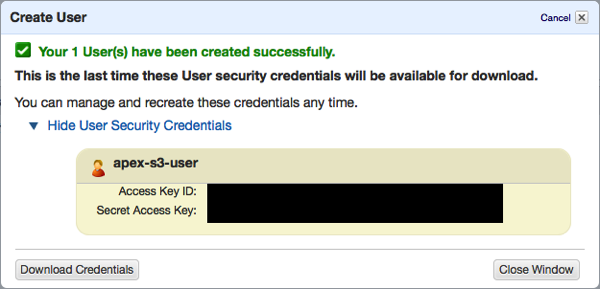

Once you click Create, another popup region will be displayed. Do not close this window! Rather, click on Show User Security Credentials to display the Access Key ID and Secret Access Key ID. Think of the Access Key ID as a username and the Secret Access Key ID as a password, and then treat them as such.

For ease of use, you may want to click Download Credentials and save your keys to your PC.

The next step is to create a Group that your new user will be associated with. The Group in AWS is used to map a user or users to a set of permissions. In this case, we will need to allow our user to have full access to S3, so we will have to ensure that the permissions allow for this. In your environment, you may not want to grant as many privileges to a single user.

To create a new group:

1) Click on the Groups tab

2) Click on Create New Group

3) Enter the Group Name, such as S3-Admin, and click Continue

The next few steps may vary depending on which privileges you want to assign to this group. The example will assume that all S3 privileges are to be assigned.

4) Select Policy Generator, and then click on the Select button.

5) Set the AWS Service drop down to Amazon S3.

6) Select All Actions (*) for the Actions drop down.

7) Enter arn:aws:s3:::* for the Amazon Resource Name (ARN) and click Add Statement. This will allow access to any S3 resource. Alternatively, to create a more restricted group, a bucket name could have been specified here, limiting the users in this group to only be able to manage that specific bucket.

8) Click Continue.

9) Optionally rename the Policy Name to something a little less cryptic and click Continue.

10) Click Create group to create the group.

The animation below illustrates the previous steps:

Next, we’ll add our user to the newly created group.

1) Select the group that was just created by checking the associated checkbox.

2) Under the Users tab, click Add Users to Group.

3) Select the user that you want to add and then click Add Users.

The user should now be associated with the group.

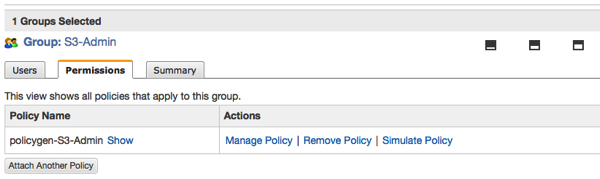

Select the Permissions tab to verify that the appropriate policy is associated with the user.

At this point, the user management portion of AWS is complete.

Configuring AWS S3

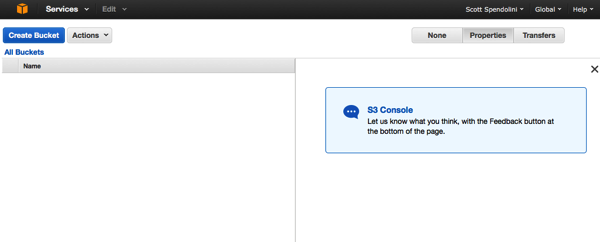

The next step is to configure the S3 portion. To do this, navigate to the S3 Dashboard:

1) Click on the Services tab at the top of the page.

2) Select S3.

You should see the S3 dashboard now:

S3 uses “buckets" to organize files. A bucket is just another word for a folder. Each of these buckets have a number of different properties that can be configured, making the storage and security options quite extensible. While there is a limit of 100 buckets per AWS account, buckets can contain folders, and when using the AWS APIs, its fairly easy to provide a layer of security based on a file’s location within a bucket.

Let’s start out by creating a bucket and setting up some of the options.

1) Click on Create Bucket.

2) Enter a Bucket Name and select the Region closest to your location and click Create. One thing to note - the Bucket Name must be unique across ALL of AWS. So don’t even try demo, test or anything like that.

3) Once your bucket is created, click on the Properties button.

I’m not going to go through all of the properties of a bucket in detail, as there are plenty of other places that already have that covered. Fortunately, for our purposes, the default settings on the bucket should suffice. It is worth taking a look at these settings, as many of them - such as Lifecycle and Versioning - can definitely come in handy and reduce your development and storage costs.

Next, let’s add our first file to the bucket. To do this:

1) Click on the Bucket Name.

2) Click on the Upload button.

3) A dialog box will appear. To add a file or files, click Add Files.

4) Using the File Upload window, select a file that you wish to upload. Select it and click Open.

5) Click Start Upload to initiate the upload process.

Depending on your file size, the transfer will take anywhere from a second to several minutes. Once it’s complete, your file should be visible in the left side of the dashboard.

6) Click on the recently uploaded file.

7) Click on the Properties button.

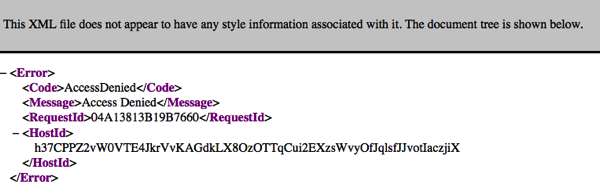

Notice that there is a link to the file displayed in the Properties window. Click on that link. You’re probably looking at something like this now:

That is because by default, all files uploaded to S3 will be secured. You will need to call an AWS API to generate a special link in order to access them. This is important for a couple of reasons. First off, you clearly don’t want just anyone accessing your files on S3. Second, even if securing files is not a major concern, keep in mind that S3 also charges for data transfer. Thus, if you put a large public file on S3, and word gets out as to its location, charges can quickly add up as many people access that file. Fortunately, securely accessing files on S3 from APEX is a breeze with the Alexandria PL/SQL libraries. More on that shortly.

If you want to preview any file in S3, simply right-click on it and select Open or Download. This is also how you rename and delete files in S3. And only authorized AWS S3 users will be able to perform these tasks, as the S3 Dashboard requires a valid AWS account.

Installing AWS S3 PL/SQL Libraries

However, they can also be installed into a centralized schema and then made available to other schemas that need to use them.

There are eight files that need to be installed, as well as a one-off command.

1) First, connect to your APEX parse-as schema and run the following script:

create type t_str_array as table of varchar2(4000) /

To install these packages, run the following four scripts as your APEX parse-as schema:

/plsql-utils-v170/ora/http_util_pkg.pks

/plsql-utils-v170/ora/http_util_pkg.pkb

/plsql-utils-v170/ora/debug_pkg.pkb

/plsql-utils-v170/ora/debug_pkg.pkb

3) Edit the file amazon_aws_auth_pkg.pkb in a text editor.

4) Near the top of the file are three global variable declarations: g_aws_id, g_aws_key and g_gmt_offset. Set the values of these three variables to the Access Key ID, Secret Key ID and GMT offset. These values were displayed and/or downloaded when you created your AWS user. If you did not record these, you will have to create a new pair back in the User Management dashboard.

g_aws_id varchar2(20) := 'XXXXXXXXXXXXXXXXXXX'; -- AWS Access Key ID g_aws_key varchar2(40) := 'XXXXXXXXXXXXXXXXXXX'; -- AWS Secret Key g_gmt_offset number := 4; -- your timezone GMT adjustment (EST = 4, CST = 5, MST = 6, PST = 7)

5) Once the changes to amazon_aws_auth_pkg.pkb are made, save the file.

6) Next, run the following four SQL scripts in the order below as your APEX parse-as schema:

/plsql-utils-v170/ora/amazon_aws_auth_pkg.pks

/plsql-utils-v170/ora/amazon_aws_auth_pkg.pkb

/plsql-utils-v170/ora/amazon_aws_s3_pkg.pks

/plsql-utils-v170/ora/amazon_aws_s3_pkg.pks

IMPORTANT NOTE: The S3 packages in their current form do not offer support for SSL. This is a big deal, since any request that is made to S3 will be done in the clear, putting the contents of your files at risk as they are transferred to and from S3. There is a proposal on the Alexandria Issues Page that details this deficiency.

I have made some minor alterations to the AMAZON_AWS_S3_PKG package which accommodate using SSL and Oracle Wallet when calling S3. You can download it from here. When using this version, there are three additional package variables that need to be altered:

g_orcl_wallet_path constant varchar2(255) := 'file:/path_to_dir_with_oracle_wallet';

g_orcl_wallet_pw constant varchar2(255) := 'Oracle Wallet Password';

g_aws_url_http constant varchar2(255) := 'https://'; -- Set to either http:// or https://

Configuring the ACL

BEGIN

DBMS_NETWORK_ACL_ADMIN.CREATE_ACL

(

acl => 'apex-s3.xml',

description => 'ACL for APEX-S3 to access Amazon S3',

principal => 'APEX_S3',

is_grant => TRUE,

privilege => 'connect'

);

DBMS_NETWORK_ACL_ADMIN.ASSIGN_ACL

(

acl => 'apex-s3.xml',

host => '*.amazonaws.com',

lower_port => 80,

upper_port => 80

);

COMMIT;

END;

/

Integration with APEX

SELECT * FROM table (amazon_aws_s3_pkg.get_object_tab(p_bucket_name => 'apex-s3-integration'))

This query will return all files that are stored in the bucket apex-s3-integration, as shown below:

If you see the file that you previously uploaded, then everything is working as it should!

SELECT

key,

size_bytes,

last_modified,

amazon_aws_s3_pkg.get_download_url

(

p_bucket_name => 'apex-s3-integration',

p_key => key,

p_expiry_date => SYSDATE + 1

) download,

key delete_doc

FROM

table (amazon_aws_s3_pkg.get_object_tab(p_bucket_name => 'apex-s3-integration'))

amazon_aws_s3_pkg.delete_object

(

p_bucket_name => 'apex-s3-integration',

p_key => :P1_KEY

);

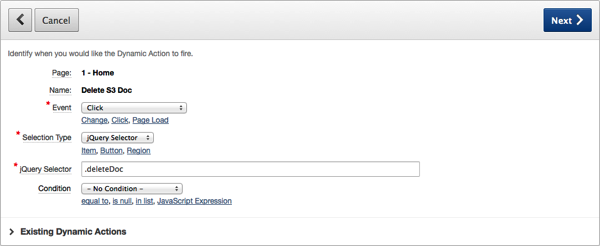

Enter P1_KEY for Page Items to Submit and click Create.

FOR x IN (SELECT * FROM wwv_flow_files WHERE name = :P2_DOC)

LOOP

-- Create the file in S3

amazon_aws_s3_pkg.new_object

(

p_bucket_name => 'apex-s3-integration',

p_key => x.filename,

p_object => x.blob_content,

p_content_type => x.mime_type

);

END LOOP;

-- Remove the doc from WWV_FLOW_FILES

DELETE FROM wwv_flow_files WHERE name = :P2_DOC;

Comments

Just a quick comment - in your overview, you mentioned to store 1TB of data it is 3 cents/month. I understood this to be 3 cents per GB up to the first TB.

Some adjustments I made to the package(s).

- Rather than using the g_gmt_offset variable, I adjusted the function (get_date_string) that returns the current date to return it in UTC format without the dependency of g_gmt_offset being accurate: to_char(sys_extract_utc(systimestamp), 'Dy, DD Mon YYYY HH24:MI:SS', 'NLS_DATE_LANGUAGE = AMERICAN') || ' GMT'.

- Enabling server side encryption. In the assignment of l_auth_string in the new_object procedure, pass in the header: x-amz-server-side-encryption: AES256 and extend l_header_names and l_header_values arrays to pass in x-amz-server-side-encryption and AES256 respectively.

- Enabling reduced redundancy storage - for non prod systems it's probably not necessary to use the standard storage class. In the assignment of l_auth_string in the new_object procedure, pass in the header: x-amz-storage-class: STANDARD or REDUCED_REDUNDANCY and extend l_header_names and l_header values arrays to pass in x-amz-storage-class and STANDARD or REDUCED_REDUNDANCY respectively.

Thanks for your feedback; I've updated the post to reflect the accurate pricing information.

Your other suggestions touch on some additional capabilities of S3; encryption and redundancy. Both of these should be considered for sensitive production data, as they offer additional protection, especially when used together.

Thanks,

- Scott -

I set this up and it's working fine, with one problem.

I've created a folder in the bucket using Greek characters and when retrieving the list those characters are garbage.

I'm using Oracle XE 10g with NLS_CHARACTERSET = AL32UTF8. Any ideas how this could work?

Use this URL: https://s3.amazonaws.com/bucket_name/foo

Even though the access is blocked, you should be able to get the certificate from that page.

Thanks,

- Scott -

- Scott -

My APEX instance is hosted at Enciva.com and is currently Oracle 11. The GitHub issue comments mentions that the Oracle 12c dbms_crypto package has added HMAC_SH256 support. I can ask Enciva to upgrade my instance to Oracle 12c, but was wondering what else I would need to do to make this work? Not sure I have the skills or patience for a major change to the packages that Morten and you have so generously provided.

Did you also run into this issue? Find a solution?

Upgrading to 12c is likely the best approach. There is a patch that will add the new cyphers to 11g as well, but it took quite a while for us to get that to work on another instance. You are welcome to pursue that path as well, but you'll have to work with Oracle Support to get the patch applied.

- Scott -

I installed the AWS CLI onto my laptop and can list the document using the same IAM user and bucket name. I would LOVE to get this working, so any help would be appreciated.